Vol. 40 (Number 39) Year 2019. Page 23

DELBURY, Perrine A. 1

Received: 19/06/2019 • Approved: 05/11/2019 • Published 11/11/2019

ABSTRACT: This article analyzes the weekly competency-based evaluation performance of 140 French high school sophomores in the Life and Earth Sciences class over an entire school year. The collected data were used to correlate progress with individual and group characteristics. Key competency categories were compared to establish whether there was progress. Overall progress in key competency acquisition was found to be negative for the majority of students. The feasibility of competency-based evaluation is discussed and questioned unless research focuses on how competencies are acquired.

It is believed that globalization and modernization are creating an interconnected world that expects individuals to use a wide range of competencies in order to face its complex challenges. One of the challenges underpinning assessment is defining the term “competency”. Watson et al. (2002) suggest that competency is a nebulous concept defined in different ways by different people. In the literature, the term is defined along two main lines: one refers to the results of training or competent performance (the outputs), the other to the attributes a person needs to achieve competent performance (the inputs) (Hoffman, 1999). Weinert (2001) describes it as the combination of skills, knowledge, attitudes, values and abilities needed to solve specific problems, together with the motivational, volitional and social dispositions to use these skills and abilities in variable situations, which underpin effective and/or superior performance in a professional or occupational area. According to the Organisation for Economic Co-operation and Development’s Definition and Selection of Key Competencies project, “a competency is more than just knowledge and skills; it involves the ability to meet complex demands by drawing on and mobilizing psychosocial resources (including skills and attitudes) in a particular context” (OECD, 2005, p. 7). Rey (2012) describes it as “knowing how to act”. In spite of its multiple definitions, competency is internationally accepted as the new focus of 21st-century education, and school should be the place that prepares students to face complex real-life problems (Bertemes, 2012). School should be more about training students to solve life problems they have not been prepared for, and less about traditional evaluations they have been prepared for (Bertemes, 2012).
To answer this challenge, competency-based learning models entered the educational field to meet each student where they are and to lead them to success in postsecondary education, in an ever-changing workplace and in civic life.

Yet confusion often arises because the term is used to denote either an individual’s capacities or the skills, abilities, content knowledge and attitudes to be acquired as part of a life role or occupation (Rey et al., 2006). Its relationship to other concepts such as “capability”, “performance”, “ability”, “skills” and “expertise” is unclear. Such abstract definitions are far removed from practical implementation. To address this problem, competency education models have been built on frameworks of key competency categories. In 2006, the French Ministry of Education adopted the “Common Core of Knowledge and Competencies” (French Ministry of Education [MEN], 2006) as the legal reference for all actors of the school system to ensure the quality of education, in which competency is “(…) conceived as a mix of fundamental contemporary knowledge, skills to implement in a diversity of settings and necessary attitudes (…)”.

The key competencies are mastery of the French language; fluency in a foreign language; the main elements of mathematics and scientific and technological culture; mastery of information and communication technologies; mastery of humanistic culture; mastery of social and civic competencies; and finally, the ability to be autonomous and to make decisions. Each category (for example, mastery of the French language) is divided into domains (for example, reading, writing, reciting), and each domain into items (for example, identifying the meaning of an instruction).

Although the Common Core provided details and clarified the complexity of the definition, the question of how to evaluate competencies raises just as much concern (Janichon, 2014). Rey (2012) states that evaluating competencies is like “observing students in action”. To be effective, competency evaluations should establish a basis for standards and, in this sense, act as a link between abstract definitions and concrete, explicit, measurable and transferable learning objectives that empower students. Perrenoud (2015) points out two conditions for evaluating skills and competencies: first, the resources needed to solve the task must be either already acquired or available to students at all times; second, the degree of complexity of the tasks must be consistent.

In other words, a student is considered competent when he or she can solve a task in an unfamiliar context using available resources and previously acquired skills and knowledge. For teachers, this means designing learning situations that build content knowledge while articulating skills and attitudes, a pedagogical method known as the complex task.

Complex tasks do not fragment problems into a list of isolated questions, and their context must be part of the problem’s solution. They should let students concentrate on what is expected of them as a competency, instead of being passively limited to reproducing content knowledge, and thereby engage them actively in the evaluation (Rey, 2012). They aim to transform the way students’ cognition is organized by fostering a better understanding of how knowledge and skills are incorporated and operationalized in a wide range of contexts, rather than repeated and applied under conditions similar to those in which they were acquired (Rey et al., 2006).

In the teachers’ lounge of Saphyre River High School (the name has been changed), the evaluation of skills and attitudes is a source of concern. Historically, grading has been the system of choice to evaluate students’ learning of recently taught content knowledge. Yet it is widely accepted that grading involves subjective judgements that have an exaggerated impact on students’ orientation and future, and that evaluation needs to better serve the learning process. Moreover, the evaluation of complex tasks raises two questions regarding the traditional didactic contract between students and teachers. First, if the objective of a complex task is to prompt students to use a mix of knowledge, skills and attitudes, can items of each domain be evaluated separately, i.e. can skill-related and attitude-related items be treated as independent of content knowledge? Second, and as a consequence, can skill-related and attitude-related items be trained? The latter question was put simply in the Saphyre River High School teachers’ lounge: are skills and attitudes like “bike riding” (once learned, always mastered) or like “pig weighing” (weighing the pig daily does not make it gain weight)?

This study focuses on skill-related categories in a Life and Earth Sciences high school class. It hypothesizes that skill-related items can be evaluated separately from content knowledge and that improvement can be shown after weeks of skill training. Using a competency-based evaluation model, it aims to establish whether 140 high school sophomores actually make progress in skill-related items over the school year.

The following questions are consequently addressed:

(i) Do students globally improve their scientific skills and competencies over time or with practice?

(ii) Do students improve in all skill categories or in specific ones?

(iii) Which student characteristics (gender, scientific profile and teamwork) are likely to influence the tendency to improve, or the reverse?

And finally,

(iv) Which group characteristics (degree of homogeneity, scientific profile and teamwork) are likely to influence the tendency to improve, or the reverse?

The hypothesis here leans toward the “bike-riding” tendency, i.e., after a certain period of practice, students should have acquired more skills and competencies at the end of the year than at the beginning.

School science education seeks to develop mental processes so that students can experience and understand how scientific understanding is built, as well as the nature of scientific enquiry and knowledge. In the French educational framework, the competencies considered necessary include engaging in the scientific approach (planning and setting up experimental situations, taking measurements and making observations with appropriate instruments, etc.), responsible attitudes towards health and the environment in general, and content knowledge (MEN, 2011).

This study is relevant because competency evaluation has sparked little interest in the educational research community, probably because such evaluations are still scarce and have seen little in-the-field exploration and practice (Janichon, 2014). Despite its 2006 decree, the French Ministry of Education did not issue implementation guidelines before 2010; only after that date did the concept begin to reach schools and classrooms.

Dell’Angelo (2011) and Loisy et al. (2014) studied how different competency-based evaluations were implemented in three French educational academies. Their results showed that the majority of evaluated skills are general skills and few are specific competencies; that attitudes are rarely, if ever, listed as competencies for reasons of construct validity, as teachers expressed serious doubts about the possibility of evaluating an attitude in class (Janichon, 2014); and that it is difficult to evaluate several skills and competencies for each student at the same time during class (Dell’Angelo, 2011). Regarding the concrete act of measuring competency, Crahay (2006) states that what complex tasks really measure is not the result of practising a competency but the ability to make the right decision: a mysterious decision-making process that has nothing to do with competency training. Finally, Rey et al. (2006) point to the risk of promoting behaviorist practices because of the diversity of criteria existing for each competency. During the 2010s, competency-based evaluation timidly began to be explored as one of the tools and pedagogical practices that could replace traditional time-based pedagogy. At the time and place of this study (2013-2014), none of the teachers of the semi-rural high school had yet adopted competency-based pedagogy in their classrooms.

The design of this study is a correlational longitudinal panel of one school cohort in a Life and Earth Sciences sophomore class. Each week, from September 2013 to June 2014, the teacher recorded the skill performance of her 140 students during the complex activity.

The participants of this study were sophomores (n=140) of a public senior high school in a semi-rural area of north-western France with a high unemployment rate (approximately 20%), especially among 15-24-year-olds (approximately 31%). With a slight female majority (59.3%), the students’ mean age was 15.47 years (SD=0.8). They were distributed into eight groups of about 18 students, with no prior selection, and all started and ended the school year at the same time (except for 4 cases). Once the research question was set, the following exclusion criteria were applied to the data: a case was excluded if the student had missed more than 40% of skill evaluations over the whole year, or 12 evaluations in the first six weeks, or 6 evaluations in the last six weeks. After applying these filters, the number of cases was reduced to 117 students (n=117), with a mean of 14.63 students per group (SD=2.49). Students were always encouraged to work in groups, yet could also work individually, and could ask for assistance during class time. This investigation preserved the anonymity of students’ information in accordance with statistical confidentiality rules.
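The exclusion rules above can be expressed as a small filter. The sketch below is a minimal illustration, not the author's procedure: the `Evaluation` record layout, the function name `is_excluded` and the week ranges in the usage note are hypothetical, and it assumes that “more than” applies to all three thresholds and that an evaluation with no registered rating counts as missed.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Evaluation:
    """One planned skill evaluation for one student (hypothetical record layout)."""
    week: int              # teaching week in which the evaluation was planned
    skill: int             # skill number from Table 1
    level: Optional[str]   # rating on the three-level scale, or None if not registered


def is_excluded(evaluations: List[Evaluation],
                first_weeks: range,
                last_weeks: range,
                max_missed_share: float = 0.40,
                max_missed_first: int = 12,
                max_missed_last: int = 6) -> bool:
    """Return True if a student's record meets any of the three exclusion criteria.

    Assumption: "more than" applies to every threshold, and a missing rating
    (level is None) counts as a missed evaluation.
    """
    if not evaluations:
        return True  # no data at all: nothing to analyze
    missed = [e for e in evaluations if e.level is None]
    # Criterion 1: more than 40% of the year's evaluations missed.
    if len(missed) > max_missed_share * len(evaluations):
        return True
    # Criterion 2: more than 12 missed evaluations in the first six weeks.
    if sum(1 for e in missed if e.week in first_weeks) > max_missed_first:
        return True
    # Criterion 3: more than 6 missed evaluations in the last six weeks.
    if sum(1 for e in missed if e.week in last_weeks) > max_missed_last:
        return True
    return False
```

For example, with the 29 weeks of Table 2, `is_excluded(records, range(1, 7), range(24, 30))` would flag a student who missed seven evaluations in weeks 24 to 29, even if that student's yearly absence rate stayed below 40%.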

The competency-based evaluation requires implementing the complex activity, also called the complex task, as it measures the competency of combining skills and attitudes to resolve a particular situation. The complex task model typically follows this framework: first, a situation is described (context, timeframe and key elements); then the problem to be solved is stated in one clear sentence; finally, the resources available to solve the problem are listed, together with the skills and attitudes to be evaluated. All tasks require an end product completed individually.

During the first trimester of this investigation, each skill had detailed criteria associated with it on the instruction sheet (for example, the criteria for the skill “Using an optical microscope” are “Lowest-magnification objective in place; sample centered on the stage; light on; coarse and fine focus”). After the first trimester, all criteria were removed from the instruction sheet, yet remained permanently available to all in the methodology class book, as suggested by Perrenoud (2015). This complex task model respects the institutional framework of competency evaluation (MEN, 2011) and was validated by the regional pedagogical supervisor of Life and Earth Sciences during the 2013-2014 school year. The selected skills and attitudes for the 2013-2014 school year are presented in Table 1 and the K10 Life and Earth Sciences curriculum topics in Table 2.

Table 1

List of selected competency categories and skills for K10

| Skill category | Skill | No.** |
|---|---|---|
| Accessing scientific information [I]* | Identifying, retrieving and organizing information from texts, graphics, tables and diagrams | 1 |
| Using observing and measuring techniques [Re]* | Following a set protocol | 2 |
| | Handling laboratory materials | 3 |
| | Using an optical microscope | 4 |
| | Measuring with instruments | 5 |
| Adopting the scientific method [Ra]* | Linking information from different sources | 6 |
| | Evaluating, interrogating, questioning | 7 |
| | Formulating hypotheses | 8 |
| | Arguing, conceiving a strategy | 9 |
| | Creating, conceiving a model | 10 |
| Communicating results and conclusions [Co]* | Using scientific vocabulary | 11 |
| | Presenting results in a written report or diagram | 12 |
| | Presenting results orally, graphically or numerically | 13 |
| Science-related computing [MI]* | Using general-purpose software | 14 |
| | Using applied software | 15 |
| | Using computer-assisted experimentation | 16 |
| Being engaged in the learning process [As]* | Working in groups | 17 |
| | Demonstrating curiosity, interest | 18 |
| | Demonstrating responsibility for materials | 19 |
| | Demonstrating responsibility for one’s own health and that of others | 20 |

Note: * Letter code used for this skill category in the rest of this article; ** Number assigned to each skill.

Table 2

Curriculum topics covered and corresponding evaluated skills per week

| Week | Curriculum topic | Evaluated skills* |
|---|---|---|
| 1 | Ecosystem diversity | 1, 12 |
| 2 | Species diversity | 1, 6, 14 |
| 3 | Genetic diversity | 2, 3, 10, 12 |
| 4 | Causes of biodiversity transformation | 1, 12, 17 |
| 5 | Types of living cells | 3, 6, 11, 12, 14, 15 |
| 6 | Variety of cell metabolisms | 1, 2, 6, 9, 11, 12 |
| 7 | Molecule carrying genetic information | 1, 9, 10, 12 |
| 8 | Genetic variation | 7, 8, 9 |
| 9 | Main families of organic molecules | 1, 1, 2, 6, 8 |
| 10 | Earth’s characteristics for living organisms | 1, 9, 12 |
| 11 | Habitable zone | 1, 9, 12 |
| 12 | Photosynthesis | 2, 12 |
| 13 | Soil components | 1, 1, 2, 3 |
| 14 | Water cycle | 3, 9, 12, 17 |
| 15 | Sustainable agriculture challenges | 1, 12, 15, 17 |
| 16 | Coal formation | 1, 4, 8, 9, 12 |
| 17 | Carbon cycle | 1, 1, 7, 8, 12 |
| 18 | Renewable energies | 1, 9, 15, 12, 17 |
| 19 | H2O and air cycles | 1, 3, 8, 10, 12 |
| 20 | The Sun as the driving force | 1, 3, 8, 10, 12 |
| 21 | O2 intake and heart rate | 1, 2, 5, 9, 12 |
| 22 | Maximum oxygen intake | 1, 2, 5, 9, 12 |
| 23 | Heart anatomy | 1, 2, 4, 9, 12 |
| 24 | Heart pressure | 1, 1, 2, 3, 15 |
| 25 | Integrated work of body systems | 2, 2, 6, 12 |
| 26 | Sports and accidents | 1, 1, 3, 12 |
| 27 | Muscle cell contraction | 1, 6, 12 |
| 28 | Physical exercise and health | 1, 12 |
| 29 | Sports and doping | 9, 13 |

Note: * Each number corresponds to a skill listed in Table 1.

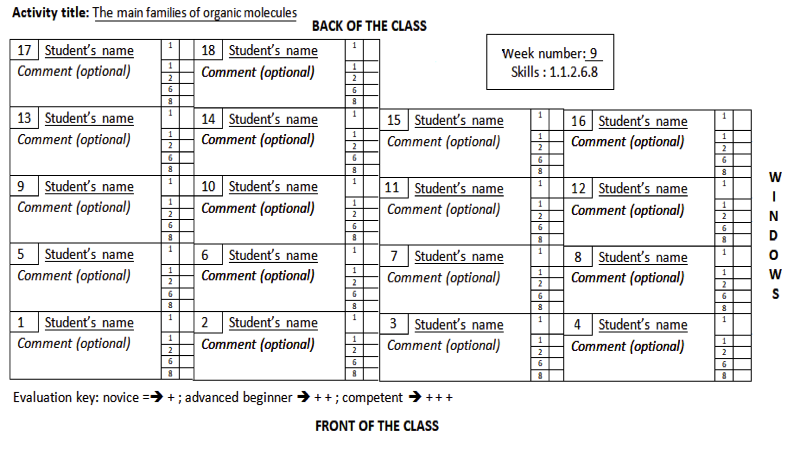

Since the competency evaluation instrument was applied by the teacher during class time, as suggested by Rey (2012), its format had to be practical and easy to complete, as shown in Figure 1. Each week, four to six skills were evaluated for each student on a three-level skill acquisition scale inspired by the Dreyfus model of skill acquisition (Dreyfus, 2004) and simplified as follows: novice, describing an incomplete understanding of the skill and the need for substantial supervision to complete it; advanced beginner, describing a working understanding of the skill and the need for minor supervision to complete it; and competent, describing a good working and background understanding and the ability to complete the skill independently.

Figure 1

Skill evaluation instrument

For each student, data were collected once a week from September 2013 to June 2014 through the teacher’s observation of his or her performance during the main class activity. Measurements started in the second week of class, so as to give students and teacher time to get acquainted during the first week. Skill evaluations occurred weekly, yet when a student chose not to perform one of the listed skills, or unforeseen circumstances prevented it, no evaluation was recorded.

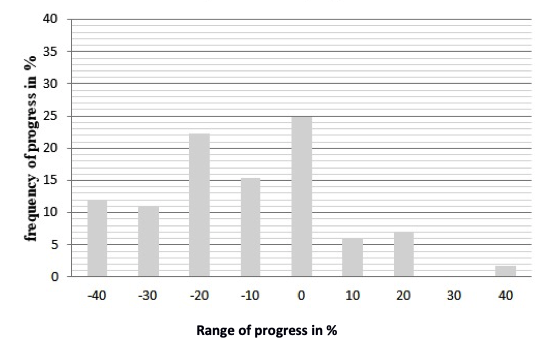

The first part of the results section explores the data using individual students as units of analysis. To answer the first question (i), i.e. to establish whether students were globally more competent at the end of the year than at the beginning, the variable “Global progress” was created, defined as the total number of acquired skills among the last 21 evaluated skills (Cfinal) minus the total number of acquired skills among the first 21 evaluated skills (C0): “Global progress” = Cfinal - C0, with Cfinal - C0 > 0 indicating progress. Since progress from week seven to week twenty-three is not considered in this equation, these are not longitudinal results but rather a snapshot of “absolute” progress. Results are displayed on a bar graph with the frequency of progress on the y-axis, distributed in ten-percent ranges of progress on the x-axis.
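The “Global progress” variable can be sketched in a few lines. This is an illustrative sketch under stated assumptions, not the SPSS procedure used in the study: the function names are hypothetical; it assumes that a skill counts as “acquired” only when rated competent (the text does not say how the three levels map onto acquisition); and it assumes that the ten-percent ranges of Graph 1 come from dividing Cfinal - C0 by the 21 evaluations in each window.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple

ACQUIRED = "competent"  # assumption: only the top level of the scale counts as acquired


def acquired_counts(levels: Sequence[str], window: int = 21) -> Tuple[int, int]:
    """Return (C0, Cfinal): acquired skills in the first and last `window` evaluations.

    `levels` holds one student's registered ratings in chronological order.
    """
    if len(levels) < 2 * window:
        raise ValueError("need at least 2 * window registered evaluations")
    c0 = sum(1 for lvl in levels[:window] if lvl == ACQUIRED)
    c_final = sum(1 for lvl in levels[-window:] if lvl == ACQUIRED)
    return c0, c_final


def global_progress(levels: Sequence[str], window: int = 21) -> float:
    """Cfinal - C0, expressed as a share of the window size (assumed normalization)."""
    c0, c_final = acquired_counts(levels, window)
    return (c_final - c0) / window


def progress_bin(levels: Sequence[str], window: int = 21) -> int:
    """Lower bound, in percent, of the ten-percent range containing the progress.

    Integer floor division avoids floating-point edge cases at range boundaries.
    """
    c0, c_final = acquired_counts(levels, window)
    return (10 * (c_final - c0) // window) * 10


def progress_distribution(students: List[Sequence[str]], window: int = 21) -> Dict[int, int]:
    """Number of students per ten-percent progress range (the bars of a histogram)."""
    return dict(Counter(progress_bin(s, window) for s in students))
```

Under these assumptions, a student rated competent on all of the first 21 evaluations but on only 17 of the last 21 gets (17 - 21) / 21, roughly -0.19, and falls in the [-20%, -10%) range.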

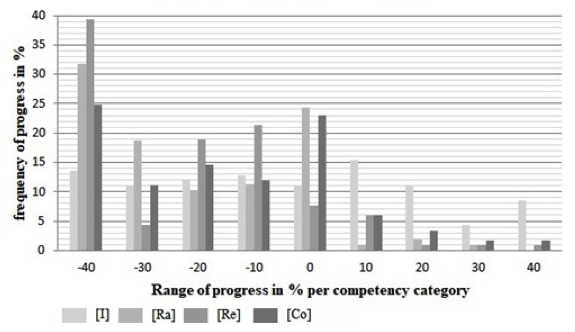

To answer the second question (ii), which aimed at verifying whether skill categories follow the same pattern as the “Global progress” variable, we examined progress within each skill category (listed in Table 1) through a variable named “Progress per skill category”. It was created by subtracting the number of acquired skills in each skill category among the first 21 evaluated skills (M0) from the number of acquired skills in that category among the last 21 evaluated skills (Mfinal): “Progress per skill category” = Mfinal - M0, with Mfinal - M0 > 0 indicating progress. The science-related computing skill category was not considered here because it was evaluated only once among the last 21 evaluated skills. Results are displayed on a bar graph with the frequency of progress on the y-axis and, on the x-axis, the four skill categories, namely “Accessing scientific information” [I], “Adopting the scientific method” [Ra], “Using observing and measuring techniques” [Re] and “Communicating results and conclusions” [Co], each distributed in ten-percent ranges of progress.
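The same windowed difference can be computed per skill category. In this sketch, the skill-to-category mapping is transcribed from Table 1, while the (skill number, rating) record format, the function names and the rule that only a competent rating counts as acquired are assumptions for illustration. By default it reports only the four categories shown in Graph 2.

```python
from collections import Counter
from typing import Dict, Iterable, Sequence, Tuple

# Skill number -> category code, transcribed from Table 1.
CATEGORY_OF_SKILL: Dict[int, str] = {
    1: "I",
    2: "Re", 3: "Re", 4: "Re", 5: "Re",
    6: "Ra", 7: "Ra", 8: "Ra", 9: "Ra", 10: "Ra",
    11: "Co", 12: "Co", 13: "Co",
    14: "MI", 15: "MI", 16: "MI",
    17: "As", 18: "As", 19: "As", 20: "As",
}

ACQUIRED = "competent"  # assumption: only the top level of the scale counts as acquired


def acquired_per_category(records: Iterable[Tuple[int, str]]) -> Counter:
    """Count acquired skills per category in a sequence of (skill number, rating) pairs."""
    return Counter(CATEGORY_OF_SKILL[skill] for skill, level in records if level == ACQUIRED)


def category_progress(records: Sequence[Tuple[int, str]],
                      window: int = 21,
                      categories: Sequence[str] = ("I", "Re", "Ra", "Co")) -> Dict[str, int]:
    """Mfinal - M0 for each category, for one student's chronologically ordered records."""
    if len(records) < 2 * window:
        raise ValueError("need at least 2 * window registered evaluations")
    m0 = acquired_per_category(records[:window])
    m_final = acquired_per_category(records[-window:])
    # A Counter returns 0 for categories with no acquired skill in a window.
    return {cat: m_final[cat] - m0[cat] for cat in categories}
```

Because the windows are defined by evaluation order rather than by week, a category that happens to be rarely evaluated in one window (as with science-related computing) yields a difference based on very few observations, which is consistent with the decision to leave it out.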

As for the third question (iii), which explored whether factors such as scientific profile, gender and teamwork could influence skill acquisition, we created three additional variables to be correlated with the “Global progress” variable: “Scientific profile”, based on students’ first-trimester GPA in Maths, Physics and Chemistry, and Life and Earth Sciences; “Gender”, based on the binary option of boy or girl; and finally “Teamwork”, based on the total number of acquisitions of the “Working in groups” skill (skill 17 in Table 1). The distribution of scientific profiles within each group was tested for normality using a Kolmogorov-Smirnov test (Maths [K/S=0.68; p=0.2], Physics and Chemistry [K/S=0.47; p=0.2] and Life and Earth Sciences [K/S=0.95; p=0.13]).
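The correlations used for question (iii) are Pearson coefficients. The standard-library sketch below shows how the two derived predictors and the coefficient could be computed; the unweighted mean of GPAs for “Scientific profile”, the counting rule for “Teamwork” and all function names are assumptions. It also computes the t statistic for r, which has n - 2 degrees of freedom; turning t into a p-value needs the t distribution, for instance via `scipy.stats.pearsonr`, which returns both r and p.

```python
import math
from statistics import mean
from typing import Iterable, Sequence, Tuple


def scientific_profile(gpas: Sequence[float]) -> float:
    """Unweighted mean of first-trimester science GPAs (assumed aggregation)."""
    return mean(gpas)


def teamwork_score(records: Iterable[Tuple[int, str]]) -> int:
    """Number of times skill 17 ("Working in groups") was rated competent (assumed rule)."""
    return sum(1 for skill, level in records if skill == 17 and level == "competent")


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation coefficient of two equal-length samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two samples of equal length >= 2")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("a constant sample has no defined correlation")
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r: float, n: int) -> float:
    """t value for testing r = 0, with n - 2 degrees of freedom (requires |r| < 1)."""
    return r * math.sqrt((n - 2) / (1 - r ** 2))
```

The n - 2 degrees of freedom explain the usual r(n - 2) notation: with the 117 retained students, a coefficient is reported as r(115), as for the scientific-profile correlation in the results.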

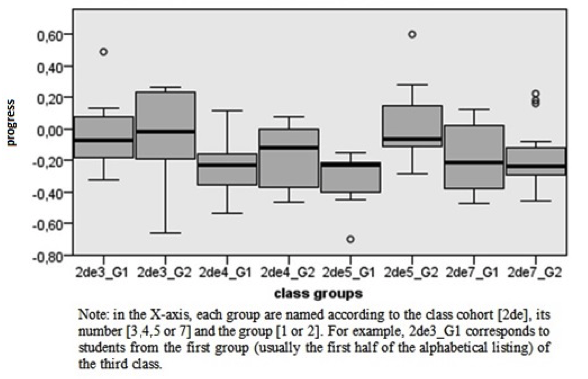

To answer the fourth and last question (iv), the data were explored using groups of students as units of analysis. To make full groups comparable with those that had excluded cases, each analysis unit considered 15 students per group, and units were named after the class cohort (2de), the class number (3, 4, 5 or 7) and the group number (G1 or G2). The variable “Global progress per group” was created using the same evaluated skills as for the first question and then compared across groups using a one-way ANOVA test. Results are displayed in a box plot to visualize progress between groups and its distribution within groups, the standard deviation within a group being an indicator of the group’s homogeneity or heterogeneity. Finally, the analysis explored the correlation of “Global progress per group” with the group’s scientific profile, based on the group’s scientific GPAs (constructed like the “Scientific profile” variable), and with the group’s capacity to work together (constructed like the “Teamwork” variable). Data were analyzed using SPSS version 25.0.
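For the group-level comparison, the results report an H statistic with 7 degrees of freedom. An H statistic with k - 1 degrees of freedom for k groups (here 8 groups, hence 7) is what a Kruskal-Wallis test reports; it is the rank-based counterpart of one-way ANOVA. The standard-library sketch below computes H with the usual correction for ties, together with the within-group standard deviation used as a homogeneity indicator. The function names and the dictionary input format are assumptions; `scipy.stats.kruskal` performs the same test and also returns a p-value.

```python
from statistics import stdev
from typing import Dict, List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values receive the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        tied_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = tied_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(groups: Dict[str, Sequence[float]]) -> float:
    """Kruskal-Wallis H statistic with tie correction; df = number of groups - 1."""
    names = list(groups)
    pooled = [v for name in names for v in groups[name]]
    n = len(pooled)
    ranks = average_ranks(pooled)
    total = 0.0
    start = 0
    for name in names:
        size = len(groups[name])
        rank_sum = sum(ranks[start:start + size])  # ranks of this group's values
        total += rank_sum ** 2 / size
        start += size
    h = 12 / (n * (n + 1)) * total - 3 * (n + 1)
    # Tie correction: divide by 1 - sum(t^3 - t) / (n^3 - n) over runs of tied values.
    tie_sizes: Dict[float, int] = {}
    for v in pooled:
        tie_sizes[v] = tie_sizes.get(v, 0) + 1
    correction = 1 - sum(t ** 3 - t for t in tie_sizes.values()) / (n ** 3 - n)
    # If every value is tied, there is no between-group difference to measure.
    return h / correction if correction > 0 else 0.0


def within_group_sd(groups: Dict[str, Sequence[float]]) -> Dict[str, float]:
    """Sample standard deviation of progress inside each group (homogeneity indicator)."""
    return {name: stdev(values) for name, values in groups.items()}
```

Called with the eight groups' “Global progress per group” values, `kruskal_wallis_h` returns H, whose degrees of freedom are `len(groups) - 1`; `within_group_sd` gives the spread used to judge each group's homogeneity.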

The results displayed in Graph 1 reveal that the vast majority of students (85%) do not show global progress in scientific skills over the year, 60% of them showing a decrease in scientific skill acquisition. Only 15% of students seem to improve their skills.

Graph 1

Global progress

The high frequency of students (over 30% of them) whose skill acquisition score fell below the 20% line is striking. Instead of the expected improvement, or even mere stagnation, there is a manifest decrease in skill acquisition. This will be discussed in a later section, as it raises the question of what could cause such results: whether they are teacher-related, student-related, evaluation-related, or a combination of these.

3.2. Do students improve in all skill categories or only in specific ones?

Graph 2

Progress per skill category

Note: the skill categories are listed in table 1

We can observe that the four skill categories do not follow the same pattern. The first category, labelled [I], which evaluates skills related to “Accessing scientific information”, shows less decrease in skill acquisition than the other three and is the most likely of all categories to reach a positive progress value. Conversely, the highest percentage of decrease (-40% progress range) belongs to the category that evaluates “Using observing and measuring techniques”, meaning that students had more difficulty with manipulation and experimentation at the end of the year than at the beginning. It is closely followed by the “Adopting the scientific method” and “Communicating results and conclusions” categories.

The scientific profile was weakly positively correlated with progress [r(115)=0.166; p=0.077], and the students’ individual capacity to work in groups even more weakly [r(117)=0.099; p=0.286]. However, both p-values exceed the conventional 0.05 threshold, so neither correlation is statistically significant. Finally, mean progress gives a slight advantage to boys (M=0.18; SD=0.18) over girls (M=0.11; SD=0.25), yet the more interesting difference between the two groups lies in the standard deviation: girls’ scores spread toward both extremes of the distribution, while boys’ scores cluster closer to the middle, meaning that boys form a more homogeneous group than girls in terms of competency learning. As individual predictors of improvement, neither scientific profile, capacity to work in a team, nor gender seems to confer any significant advantage or disadvantage.

Significant differences in progress distribution can be observed between groups [H=25.401; df=7; p<0.01], yet the distribution of progress per skill category is relatively similar across groups [K/S, p<0.05].

Graph 3

Global skill progress group comparison

Here it seems that belonging to a specific group has an impact on progress, which answers the last question about group tendencies to improve. The most heterogeneous groups are 3 (M=11.56; SD=2.99), 4 (M=11.16; SD=3.75), 5 (M=12.04; SD=2.92) and 6 (M=10.73; SD=3.12). Using the standard deviation of the scientific profile as a variable, the group’s scientific profile showed only very weak negative correlations with group progress (r(8)=-0.02) and with group dynamics (r(8)=-0.11).

It is interesting to note that progress is correlated with neither individual nor group scientific profile. The capacity of the group to work together showed only a very weak positive correlation with progress (r(8)=0.06).

Before concluding, it is of utmost importance to mention the limits of this study. Although the evaluation model remained the same throughout the study, the skills and competencies measured varied along the way. Not all skills and competencies demand the same cognitive operations, and not all contexts are equally familiar or unfamiliar to students. Regarding instrument design, implementation and measurement procedures, it should be noted that in this study the same person both taught and evaluated. Teachers’ expectations, as well as their lack of expectations, might have a biasing effect. In this particular study, an informal interview with the teacher at the end of the year revealed that she believed competency-based evaluations to be an effective way to engage students in their learning processes and had a very positive outlook on this year-long experiment. Finally, another non-negligible threat to this study is participant maturation: sophomores were fifteen- and sixteen-year-old adolescents undergoing natural changes during the year, and some groups in particular faced and dealt with difficult situations such as a classmate’s depression, school drop-outs, episodes of domestic violence, and poverty.

First, the results of this study contradict the “bike-riding” hypothesis and indicate that a wide range of factors, such as the topic and group membership, are involved in competency acquisition. These factors, and how much they influence competency acquisition, should be investigated qualitatively. The interpretation of the context seems to play a key role in skill acquisition, in part because each person interprets and reconstructs the situation based on their own realities. This has an important consequence for evaluation: students’ failure to achieve a skill may stem from different interpretations of the situation rather than from cognitive problems.

Second, the results show the complexity of the relationship between competency and evaluation. They echo a criticism of competency-based evaluation made by Marcel Crahay (2006), who states that what is really being measured is not the result of actually practising a competency but the mysterious ability to make the right decision. According to Crahay, this favors students with inherited intelligence or high inherited cultural capital. Nevertheless, in this study, the correlation between a high scientific profile and progress was not statistically significant. It is interesting, however, to report that the teacher informally interviewed students about their perception of the competency-based evaluation model during the last class of the year. Students’ opinions varied widely, from “much harder” to “much easier” than traditional evaluations. Across all the groups interviewed, the teacher consistently observed that students with a high scientific profile tended to find the competency-based evaluation harder, and students with a low scientific profile tended to find it easier.

It is hard to conceive that students could become less competent at the end of the school year than at the beginning, unless we examine the concept of competency and the real feasibility of its evaluation. The second part of this study examines students’ performance on category items, in order to determine whether certain types of items (within a category) are more easily achieved than others. If competency-based education has not yet brought about the major educational transformation it promised, the only way to keep it from becoming a missed opportunity is to consolidate research on its classroom implementation.

Bertemes, J. (2012). L’approche par compétences au Luxembourg. Une réforme en profondeur du système scolaire. In J.L. Villeneuve (Ed.), Le socle commun en France et ailleurs (pp. 888-999). Paris: Le Manuscrit.

Crahay, M. (2006). Danger, incertitudes et incomplétude de la logique de la compétence en éducation. Revue française de pédagogie, 154, 97-110.

Dell’Angelo-Sauvage, M. (2011). Les tâches complexes et l’évaluation de compétences dans l’investigation. APBG, 4, 131-147.

Dreyfus, S. (2004). The Five-Stage Model of Adult Skill Acquisition. Bulletin of Science, Technology & Society, 24(3), 177-181.

Hoffman, T. (1999). The meanings of competency. Journal of European Industrial Training, 23(6), 275-286.

French Ministry of Education [MEN] (2011). La mise en œuvre du socle et l’évolution d’une discipline, les sciences de la vie et de la Terre. Retrieved from http://media.eduscol.education.fr/file/socle_commun/47/6/Socle_SVT_mise-en-oeuvre_178476.pdf

French Ministry of Education [MEN] (2006). Socle commun de connaissances et de compétences. Décret n° 2006-830 du 11 juillet 2006, paru au J.O. du 12 juillet 2006. Retrieved from http://cache.media.education.gouv.fr/file/51/3/3513.pdf

Janichon, D. (2014). Évaluer des attitudes, un défi pour le socle commun. Évaluation diagnostique de la cohérence entre compétences sociales et compétences d’autonomie. Les sciences de l’éducation – pour l’ère nouvelle, 47(4), 104-114.

Loisy, C., Coquidé, M., Prieur, M., Aldon, G., Bécu-Robinault, K., Kahn, S., Dell’Angelo, M., Magneron, N. and Mercier-Dequidt, C. (2014). Évaluation des compétences du Socle commun en France: tensions et complexités. In C. Dierendonck (Ed.), L’évaluation des compétences en milieu scolaire et en milieu professionnel (pp. 257-267). Louvain-la-Neuve, Belgique: De Boeck Supérieur.

OECD (2005). La définition et la sélection des compétences clés. Retrieved from https://www.oecd.org/pisa/35693273.pdf

Perrenoud, P. (2015). La evaluación de los alumnos. De la producción de la excelencia a la regulación de los aprendizajes. Entre dos lógicas. Buenos Aires: Colihue.

Rey, O. (2012). Le défi de l’évaluation des compétences. Dossier d’actualité Veille et Analyse, 76. Lyon : ENS de Lyon.

Rey, B., Carette, V., Defrance, A. and Kahn, S. (2006). Les compétences à l’école. Apprentissage et évaluation. Bruxelles: De Boeck.

Watson, R., Stimpson, A., Topping, A. and Porock, D. (2002). Clinical competence assessment in nursing: a systematic review of the literature. Journal of Advanced Nursing, 39(5), 422-436.

Weinert, F. E. (2001). Concepts of Competence: A Conceptual Clarification. In D. S. Rychen and L. H. Salganik (Eds.), Defining and Selecting Key Competencies (pp. 45-70). Göttingen: Hogrefe & Huber.

1. PhD candidate on high school discrimination and inclusive education at the Educational Sciences Department, Universidad del Bío Bío, Chillán, Chile. Holder of a CONICYT fellowship. Co-founder of http://www.svt-egalite.fr (advocacy for discrimination-free science pedagogy), pdelbury@cdegaulle.cl